// i direction, send variant using MPI_Ibsend (buffered, non-blocking)
MPI_Ibsend(MPI_BOTTOM, 1, MPItypeSiL, rank_il, 100, comm_cart, &req[exNb++]);
MPI_Ibsend(MPI_BOTTOM, 1, MPItypeSiU, rank_iu, 200, comm_cart, &req[exNb++]);

// i direction, send variant using MPI_Isend (standard, non-blocking)
MPI_Isend(MPI_BOTTOM, 1, MPItypeSiL, rank_il, 100, comm_cart, &req[exNb++]);
MPI_Isend(MPI_BOTTOM, 1, MPItypeSiU, rank_iu, 200, comm_cart, &req[exNb++]);

// i direction, send variant using MPI_Issend (synchronous, non-blocking)
MPI_Issend(MPI_BOTTOM, 1, MPItypeSiL, rank_il, 100, comm_cart, &req[exNb++]);
MPI_Issend(MPI_BOTTOM, 1, MPItypeSiU, rank_iu, 200, comm_cart, &req[exNb++]);

// j direction, send variant using MPI_Ibsend (buffered, non-blocking)
MPI_Ibsend(MPI_BOTTOM, 1, MPItypeSjL, rank_jl, 300, comm_cart, &req[exNb++]);
MPI_Ibsend(MPI_BOTTOM, 1, MPItypeSjU, rank_ju, 400, comm_cart, &req[exNb++]);

// j direction, send variant using MPI_Isend (standard, non-blocking)
MPI_Isend(MPI_BOTTOM, 1, MPItypeSjL, rank_jl, 300, comm_cart, &req[exNb++]);
MPI_Isend(MPI_BOTTOM, 1, MPItypeSjU, rank_ju, 400, comm_cart, &req[exNb++]);

// j direction, send variant using MPI_Issend (synchronous, non-blocking)
MPI_Issend(MPI_BOTTOM, 1, MPItypeSjL, rank_jl, 300, comm_cart, &req[exNb++]);
MPI_Issend(MPI_BOTTOM, 1, MPItypeSjU, rank_ju, 400, comm_cart, &req[exNb++]);

// k direction, send variant using MPI_Ibsend (buffered, non-blocking)
MPI_Ibsend(MPI_BOTTOM, 1, MPItypeSkL, rank_kl, 500, comm_cart, &req[exNb++]);
MPI_Ibsend(MPI_BOTTOM, 1, MPItypeSkU, rank_ku, 600, comm_cart, &req[exNb++]);

// k direction, send variant using MPI_Isend (standard, non-blocking)
MPI_Isend(MPI_BOTTOM, 1, MPItypeSkL, rank_kl, 500, comm_cart, &req[exNb++]);
MPI_Isend(MPI_BOTTOM, 1, MPItypeSkU, rank_ku, 600, comm_cart, &req[exNb++]);

// k direction, send variant using MPI_Issend (synchronous, non-blocking)
MPI_Issend(MPI_BOTTOM, 1, MPItypeSkL, rank_kl, 500, comm_cart, &req[exNb++]);
MPI_Issend(MPI_BOTTOM, 1, MPItypeSkU, rank_ku, 600, comm_cart, &req[exNb++]);

// i direction, receive variant using MPI_Recv (blocking); statuses go to st[6] and st[7]
MPI_Recv(MPI_BOTTOM, 1, MPItypeRiL, rank_il, 200, comm_cart, &st[6]);
MPI_Recv(MPI_BOTTOM, 1, MPItypeRiU, rank_iu, 100, comm_cart, &st[7]);

// i direction, receive variant using MPI_Irecv (non-blocking)
MPI_Irecv(MPI_BOTTOM, 1, MPItypeRiL, rank_il, 200, comm_cart, &req[exNb++]);
MPI_Irecv(MPI_BOTTOM, 1, MPItypeRiU, rank_iu, 100, comm_cart, &req[exNb++]);

// j direction, receive variant using MPI_Recv (blocking); statuses go to st[8] and st[9]
MPI_Recv(MPI_BOTTOM, 1, MPItypeRjL, rank_jl, 400, comm_cart, &st[8]);
MPI_Recv(MPI_BOTTOM, 1, MPItypeRjU, rank_ju, 300, comm_cart, &st[9]);

// j direction, receive variant using MPI_Irecv (non-blocking)
MPI_Irecv(MPI_BOTTOM, 1, MPItypeRjL, rank_jl, 400, comm_cart, &req[exNb++]);
MPI_Irecv(MPI_BOTTOM, 1, MPItypeRjU, rank_ju, 300, comm_cart, &req[exNb++]);

// k direction, receive variant using MPI_Recv (blocking); statuses go to st[10] and st[11]
MPI_Recv(MPI_BOTTOM, 1, MPItypeRkL, rank_kl, 600, comm_cart, &st[10]);
MPI_Recv(MPI_BOTTOM, 1, MPItypeRkU, rank_ku, 500, comm_cart, &st[11]);

// k direction, receive variant using MPI_Irecv (non-blocking)
MPI_Irecv(MPI_BOTTOM, 1, MPItypeRkL, rank_kl, 600, comm_cart, &req[exNb++]);
MPI_Irecv(MPI_BOTTOM, 1, MPItypeRkU, rank_ku, 500, comm_cart, &req[exNb++]);
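
The tags in the calls above pair up across neighbors: a message sent to `rank_il` with tag 100 is received by that process from its `rank_iu` with tag 100, and likewise 200 in the opposite direction. The following is a minimal sketch of that matching rule for the i direction only. It uses no MPI and assumes a periodic 1D ring of ranks. `Message`, `lowerNeighbor`, `upperNeighbor`, `postSends` and `countMatchedReceives` are hypothetical names introduced for illustration and are not part of the listed code.

```cpp
#include <cassert>
#include <vector>

// Hypothetical record of one message: sender, receiver and tag.
struct Message { int source; int dest; int tag; };

// Tags taken from the listing for the i direction.
const int kTagToLower = 100;  // sent to rank_il, received from rank_iu
const int kTagToUpper = 200;  // sent to rank_iu, received from rank_il

// Assumption of this sketch: ranks form a periodic 1D ring of n processes.
int lowerNeighbor(int rank, int n) { return (rank - 1 + n) % n; }
int upperNeighbor(int rank, int n) { return (rank + 1) % n; }

// Every rank posts the two sends that appear in the listing.
std::vector<Message> postSends(int n) {
    std::vector<Message> sends;
    for (int r = 0; r < n; ++r) {
        sends.push_back({r, lowerNeighbor(r, n), kTagToLower});
        sends.push_back({r, upperNeighbor(r, n), kTagToUpper});
    }
    return sends;
}

// Count the receives that are matched by exactly one send.
int countMatchedReceives(int n) {
    const std::vector<Message> sends = postSends(n);
    int matched = 0;
    for (int r = 0; r < n; ++r) {
        // Receives as in the listing: tag 200 from the lower neighbor,
        // tag 100 from the upper neighbor.
        const Message expected[2] = {
            {lowerNeighbor(r, n), r, kTagToUpper},
            {upperNeighbor(r, n), r, kTagToLower}};
        for (const Message& e : expected) {
            int hits = 0;
            for (const Message& s : sends) {
                if (s.source == e.source && s.dest == e.dest && s.tag == e.tag) ++hits;
            }
            if (hits == 1) ++matched;
        }
    }
    return matched;
}
```

With two ranks on a periodic ring, the lower and upper neighbor are the same process, so the distinct tags are what keep the two messages apart. In this sketch `countMatchedReceives(2)` still matches all four receives.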

int MPIRecvType

Definition: Parameters.cpp:239

double stop()

Stops the timer and, if asked, returns the CPU time in seconds since the previous start.

Definition: Timer.cpp:77

int Dimension

Definition: Parameters.cpp:74

void FreeMPIType()

Parallel function DOES NOT WORK!

Definition: Parallel.cpp:247

int rank_ku

Definition: Parameters.cpp:245

int MPISendType

Definition: Parameters.cpp:238

int rank_il

Definition: Parameters.cpp:243

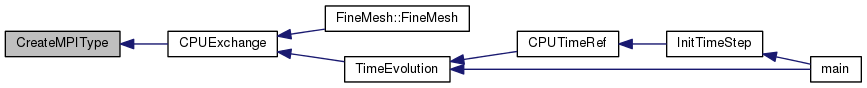

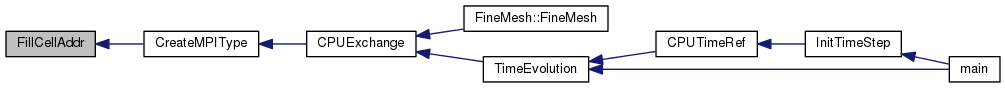

void CreateMPIType(FineMesh *Root)

Definition: Parallel.cpp:121

int WhatSend

Definition: Parameters.cpp:247

Timer CommTimer

Definition: Parameters.cpp:241

void start()

Starts timer.

Definition: Timer.cpp:62

int rank_jl

Definition: Parameters.cpp:244

int rank_ju

Definition: Parameters.cpp:244

int rank_iu

Definition: Parameters.cpp:243

int CellElementsNb

Definition: Parameters.cpp:233

int rank_kl

Definition: Parameters.cpp:245
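
The entries for `start()` and `stop()` describe a timer that reports CPU time between a start and the following stop. A minimal sketch of such a timer is given here, assuming `std::clock` as the CPU-time source. `SketchTimer`, `total()` and `timeBusyLoop` are hypothetical names, not the `Timer` class of Timer.cpp, and unlike the documented `stop()` this version always returns the elapsed time.

```cpp
#include <cassert>
#include <ctime>

// Hypothetical stand-in for a CPU timer; only the names start() and stop()
// mirror the listing, everything else is an assumption of this sketch.
class SketchTimer {
public:
    // Records the current CPU clock as the start of the measured interval.
    void start() {
        begin_ = std::clock();
        running_ = true;
    }

    // Stops the timer and returns the CPU seconds elapsed since start().
    // Returns 0 if the timer was never started.
    double stop() {
        if (!running_) return 0.0;
        const double elapsed =
            static_cast<double>(std::clock() - begin_) / CLOCKS_PER_SEC;
        total_ += elapsed;
        running_ = false;
        return elapsed;
    }

    // Sum of all measured intervals so far, in seconds.
    double total() const { return total_; }

private:
    std::clock_t begin_ = 0;
    double total_ = 0.0;
    bool running_ = false;
};

// Hypothetical helper: measures the CPU time of a small busy loop,
// standing in for a communication phase wrapped by start() and stop().
double timeBusyLoop(long iterations) {
    SketchTimer timer;
    timer.start();
    volatile double sink = 0.0;
    for (long i = 0; i < iterations; ++i) sink = sink + 0.5 * i;
    return timer.stop();
}
```

A timer such as `CommTimer` could wrap the exchange in the same way, with `start()` before the sends and receives are posted and `stop()` after they complete.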

1.8.6